Bitcoin prediction with machine learning for fun and profit in c

This allows them to be much more robust to changing markets. There is a new wave of startups trying to change how consumers interact with services by building consumer apps like Operator or x. You perform exploratory data analysis to find trading opportunities. On GDAX, you would also be paying a 0.

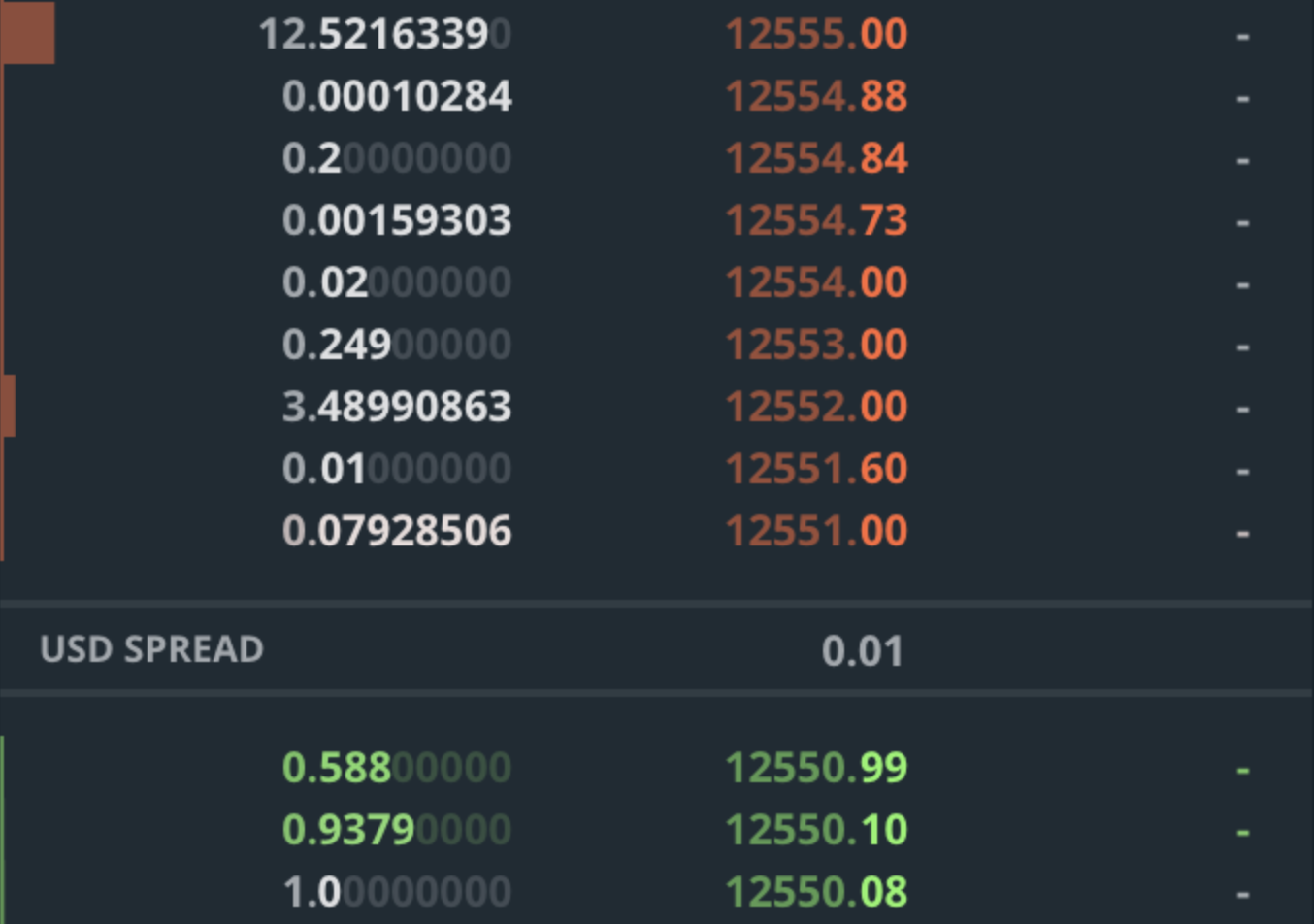

Typically, simulators ignore this and assume that orders do not have market impact. A naive approach is to predict the mid price, which is the midpoint between the best bid and the best ask. Among the top news this year was a Stanford team releasing details about a deep learning algorithm that does as well as dermatologists at identifying skin cancer. You would go to this page and see something like this. Such problems are typically much harder to solve and are an active area of research where progress is sorely needed.
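To make the mid price concrete, here is a minimal Python sketch that computes it from the levels of an order book. It assumes each level is represented as a `(price, volume)` tuple; the function names are hypothetical. Note that the mid price is not a price you can actually trade at: an immediate buy pays at least the best ask and an immediate sell receives at most the best bid, which is part of why predicting it is naive.

```python
from typing import List, Tuple

# A price level is (price, volume). Sort order does not matter here.
Level = Tuple[float, float]


def best_bid_ask(bids: List[Level], asks: List[Level]) -> Tuple[float, float]:
    """Return (best bid, best ask): the highest bid and the lowest ask price."""
    if not bids or not asks:
        raise ValueError("need at least one bid and one ask level")
    return max(p for p, _ in bids), min(p for p, _ in asks)


def mid_price(bids: List[Level], asks: List[Level]) -> float:
    """Midpoint between the best bid and the best ask."""
    best_bid, best_ask = best_bid_ask(bids, asks)
    return (best_bid + best_ask) / 2.0


def spread(bids: List[Level], asks: List[Level]) -> float:
    """Gap between best ask and best bid; you cross it when you trade immediately."""
    best_bid, best_ask = best_bid_ask(bids, asks)
    return best_ask - best_bid


bids = [(100.0, 1.5), (99.5, 2.0)]
asks = [(100.5, 0.8), (101.0, 3.0)]
print(mid_price(bids, asks))  # 100.25
print(spread(bids, asks))     # 0.5
```

A model that predicts the mid price well can still lose money once the spread is paid on every trade.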

Having challenging environments, such as DotA 2 and Starcraft 2, to test new AI techniques on is extremely important. Why did we become post-fact? Both of these reward functions naively optimize for profit. And what could be more fun than teaching machines to play Starcraft and Doom?

There are many ways to speed up the training of Reinforcement Learning agents, including transfer learning and using auxiliary tasks. For example, you may train your model in one framework but then serve it in production in another one. The same goes for chess, poker, or any other game that is popular in the RL community. A snapshot event is similar to a BookUpdate, but it contains the complete order book.
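The difference between a full snapshot and an incremental book update can be sketched as a small price-level order book. The event shapes used here (a snapshot as two lists of `(price, volume)` levels; an update as side, price, and new total volume, where 0 means the level was removed) are assumptions for illustration, not any particular exchange's feed format, and the class and method names are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

Level = Tuple[float, float]  # (price, volume)


class OrderBook:
    """Price-level order book: each side maps price -> total resting volume."""

    def __init__(self) -> None:
        self.bids: Dict[float, float] = {}
        self.asks: Dict[float, float] = {}

    def apply_snapshot(self, bids: Iterable[Level], asks: Iterable[Level]) -> None:
        # A snapshot describes the complete book, so it replaces all existing state.
        self.bids = {price: vol for price, vol in bids if vol > 0}
        self.asks = {price: vol for price, vol in asks if vol > 0}

    def apply_update(self, side: str, price: float, volume: float) -> None:
        # An update sets the new total volume at one level; 0 removes the level.
        if side == "bid":
            book = self.bids
        elif side == "ask":
            book = self.asks
        else:
            raise ValueError(f"unknown side: {side!r}")
        if volume == 0:
            book.pop(price, None)
        else:
            book[price] = volume

    def top(self, depth: int = 5) -> Tuple[List[Level], List[Level]]:
        # Best levels first: highest bids, lowest asks.
        bids = sorted(self.bids.items(), reverse=True)[:depth]
        asks = sorted(self.asks.items())[:depth]
        return bids, asks


book = OrderBook()
book.apply_snapshot(bids=[(100.0, 1.5), (99.5, 2.0)], asks=[(100.5, 0.8)])
book.apply_update("bid", 100.0, 0)    # the best bid level disappears
book.apply_update("ask", 101.0, 3.0)  # a new ask level appears
print(book.top())  # ([(99.5, 2.0)], [(100.5, 0.8), (101.0, 3.0)])
```

Replaying a snapshot followed by a stream of updates reconstructs the book at every point in time, which is what a backtesting simulator needs.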

Each level of the order book has a price and a volume. OpenAI has not yet published technical details of their solution, so it is easy for people not in the field to jump to the wrong conclusions. You can deploy your agent on an exchange through its API and immediately get real-world market feedback. Even as humanity's baseline metrics im.

The agent's actions are things like placing an order, doing nothing, canceling an order, and so on. Net profit and loss (PnL) is simply how much money an algorithm makes (positive) or loses (negative) over some period of time, minus the trading fees. Beta is closely related: it measures how strongly your strategy's returns move with the market, which tells you how volatile your strategy is compared to the market.
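Both metrics are easy to compute once you have a trade log and return series. The sketch below makes a few assumptions that are not from the text: trades are `(side, price, volume)` tuples, fees are a flat fraction of each trade's notional value (`fee_rate`, a hypothetical parameter), and any open position is valued at a given final price. Beta uses the standard definition, the covariance of strategy and market returns divided by the variance of market returns.

```python
from statistics import mean
from typing import List, Tuple

Trade = Tuple[str, float, float]  # (side, price, volume); side is "buy" or "sell"


def net_pnl(trades: List[Trade], final_price: float, fee_rate: float) -> float:
    """Profit (positive) or loss (negative) after fees.

    Any position still open at the end is valued at final_price.
    Fees are modeled as a flat fraction of each trade's notional value.
    """
    cash = 0.0
    position = 0.0
    fees = 0.0
    for side, price, volume in trades:
        notional = price * volume
        if side == "buy":
            cash -= notional
            position += volume
        elif side == "sell":
            cash += notional
            position -= volume
        else:
            raise ValueError(f"unknown side: {side!r}")
        fees += notional * fee_rate
    return cash + position * final_price - fees


def beta(strategy_returns: List[float], market_returns: List[float]) -> float:
    """Covariance of strategy and market returns divided by market variance."""
    if len(strategy_returns) != len(market_returns) or len(market_returns) < 2:
        raise ValueError("need two equally long return series with >= 2 points")
    ms, mm = mean(strategy_returns), mean(market_returns)
    cov = sum((s - ms) * (m - mm) for s, m in zip(strategy_returns, market_returns))
    var = sum((m - mm) ** 2 for m in market_returns)
    if var == 0:
        raise ValueError("market returns have zero variance")
    return cov / var  # the 1/(n-1) normalizations cancel


trades = [("buy", 100.0, 1.0), ("sell", 110.0, 1.0)]
print(net_pnl(trades, final_price=110.0, fee_rate=0.001))  # about 9.79
print(beta([0.02, -0.04, 0.06], [0.01, -0.02, 0.03]))      # about 2.0
```

A beta of 2 means the strategy tends to move twice as much as the market; a beta near 0 means its returns are largely independent of the market.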

Yann LeCun took it as an insult and promptly responded the next day. You also need to compare your trading strategy to baselines and compare its risk and volatility to other investments. Due to its dynamic graph construction, similar to what Chainer offers, PyTorch received much love from researchers in Natural Language Processing, who regularly have to deal with dynamic and recurrent structures that are hard to declare in static graph frameworks such as TensorFlow.
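One common way to compare a strategy's risk-adjusted performance against a baseline is the Sharpe ratio, the mean excess return divided by the standard deviation of returns. This is a minimal sketch, not necessarily the set of metrics the article has in mind: it assumes daily returns, uses buy-and-hold as the baseline, and annualizes with 365 periods per year on the assumption that the market trades every day. The function names and the strategy returns in the usage example are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import List


def simple_returns(prices: List[float]) -> List[float]:
    """Period-over-period returns of a price series."""
    return [curr / prev - 1.0 for prev, curr in zip(prices, prices[1:])]


def sharpe_ratio(returns: List[float], risk_free: float = 0.0,
                 periods_per_year: int = 365) -> float:
    """Annualized Sharpe ratio: mean excess return over its standard deviation.

    risk_free is the per-period risk-free rate.
    """
    excess = [r - risk_free for r in returns]
    sd = stdev(excess)  # sample standard deviation; needs at least 2 points
    if sd == 0:
        raise ValueError("returns have zero volatility")
    return mean(excess) / sd * math.sqrt(periods_per_year)


# Baseline: buy at the first price and hold until the end.
prices = [100.0, 104.0, 101.0, 107.0, 105.0]
baseline = simple_returns(prices)
strategy = [0.01, 0.02, -0.005, 0.015]  # hypothetical daily strategy returns
print(sharpe_ratio(strategy), sharpe_ratio(baseline))
```

If a strategy cannot beat buy-and-hold on a risk-adjusted basis, the extra complexity and trading fees are hard to justify.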

A stream of the above events contains all the information you saw in the GUI. A bit more formally, the input to a retrieval-based model is a context (the conversation up to this point) and a potential response. Selling works analogously, except that you are now operating on the bid side of the order book, potentially moving the order book and price down. Can Facebook cope with its new role? You would probably need to somehow enter a tournament and let your agent play there.
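Selling into the book can be sketched as walking down the bid side level by level until the order is filled. This is a simplified simulation under stated assumptions: bids are `(price, volume)` tuples sorted best (highest) first, and latency, fees, and competing orders are ignored; the function name is hypothetical. It shows both effects described above: the average fill price is worse than the best bid, and the best bid itself moves down after the trade. A simulator that assumes no market impact would instead fill the whole order at the best bid.

```python
from typing import List, Tuple

Level = Tuple[float, float]  # (price, volume)


def market_sell(bids: List[Level], volume: float) -> Tuple[float, float, List[Level]]:
    """Fill a market sell against bid levels sorted best (highest price) first.

    Returns (average fill price, filled volume, remaining bid levels).
    Ignores latency, fees, and orders that arrive while we trade.
    """
    remaining = volume
    proceeds = 0.0
    new_bids: List[Level] = []
    for i, (price, level_volume) in enumerate(bids):
        if remaining <= 0:
            new_bids.extend(bids[i:])  # deeper levels we never touched stay as-is
            break
        take = min(remaining, level_volume)
        proceeds += take * price
        remaining -= take
        if level_volume > take:
            new_bids.append((price, level_volume - take))  # partially consumed level
    filled = volume - remaining
    avg_price = proceeds / filled if filled > 0 else float("nan")
    return avg_price, filled, new_bids


bids = [(100.0, 1.0), (99.0, 2.0), (98.0, 5.0)]
avg, filled, after = market_sell(bids, 2.0)
print(avg, filled, after)  # 99.5 2.0 [(99.0, 1.0), (98.0, 5.0)]
```

Buying works the same way on the ask side, with levels sorted lowest price first.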

Ability to model other agents

A unique ability of Reinforcement Learning is that we can explicitly take other agents into account. And given the severely restricted environment, the artificially restricted set of possible actions, and the fact that there was little to no need for long-term planning or coordination, I conclude that this problem was actually significantly easier than beating a human champion in the game of Go. With that, using an RNN should be as easy as calling a function, right? The two I recommend the most are:

Because the market moves very fast, by the time the order is delivered over the network, the price has already slipped. How do you choose the threshold to place an order? It looks something like this:
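One simple heuristic for the threshold, not necessarily the one the article goes on to describe, is to act only when the predicted price move exceeds the cost of a round trip: a fee and some slippage on both entry and exit. The sketch assumes a flat proportional fee and a fixed expected slippage per side; `fee_rate`, `expected_slippage`, and `margin` are hypothetical parameters you would estimate or tune, and "sell" on a strongly negative prediction assumes you are able to sell or short.

```python
def slippage(expected_price: float, fill_price: float, side: str) -> float:
    """Relative slippage; positive means the fill was worse than expected."""
    if side == "buy":
        return (fill_price - expected_price) / expected_price
    if side == "sell":
        return (expected_price - fill_price) / expected_price
    raise ValueError(f"unknown side: {side!r}")


def decide(predicted_return: float, fee_rate: float,
           expected_slippage: float, margin: float = 0.0) -> str:
    """Trade only if the predicted move covers round-trip costs plus a margin.

    A round trip pays the fee and suffers slippage on both entry and exit.
    """
    threshold = 2 * (fee_rate + expected_slippage) + margin
    if predicted_return > threshold:
        return "buy"
    if predicted_return < -threshold:
        return "sell"
    return "hold"


print(slippage(100.0, 100.5, "buy"))                           # about 0.005
print(decide(0.01, fee_rate=0.003, expected_slippage=0.001))   # buy
print(decide(0.005, fee_rate=0.003, expected_slippage=0.001))  # hold
```

Measuring `slippage` on your own past fills is one way to get a realistic value for `expected_slippage` instead of guessing it.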